It's about a computational approach to derive sub-optimality guarantees.

You want to know how much the solution can be trusted . . .

The Central Idea

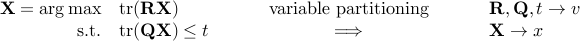

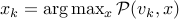

Let  be an optimization problem structure with given and optimization variables partitioned as (v,x).

be an optimization problem structure with given and optimization variables partitioned as (v,x).

Example:

Now suppose  is a “difficult” optimization problem; however,

is a “difficult” optimization problem; however,

A sequence

of

of  can be constructed such that the associated global optima of the problem, viz.

can be constructed such that the associated global optima of the problem, viz.  are known for any

are known for any  , and the “distance” between

, and the “distance” between  and

and  , is decreasing with

, is decreasing with  .

.

A sub-optimality guarantee of the obtained solutions

can be efficiently computed using the distance between

can be efficiently computed using the distance between  and

and  .

.

Then, computational sub-optimality guarantees can be obtained along with the approximate solutions, that might

outperform existing analytically derived sub-optimality guarantees, or

be the only class of sub-optimality guarantees in cases where no a priori known analytical guarantees are available for the given problem.

|

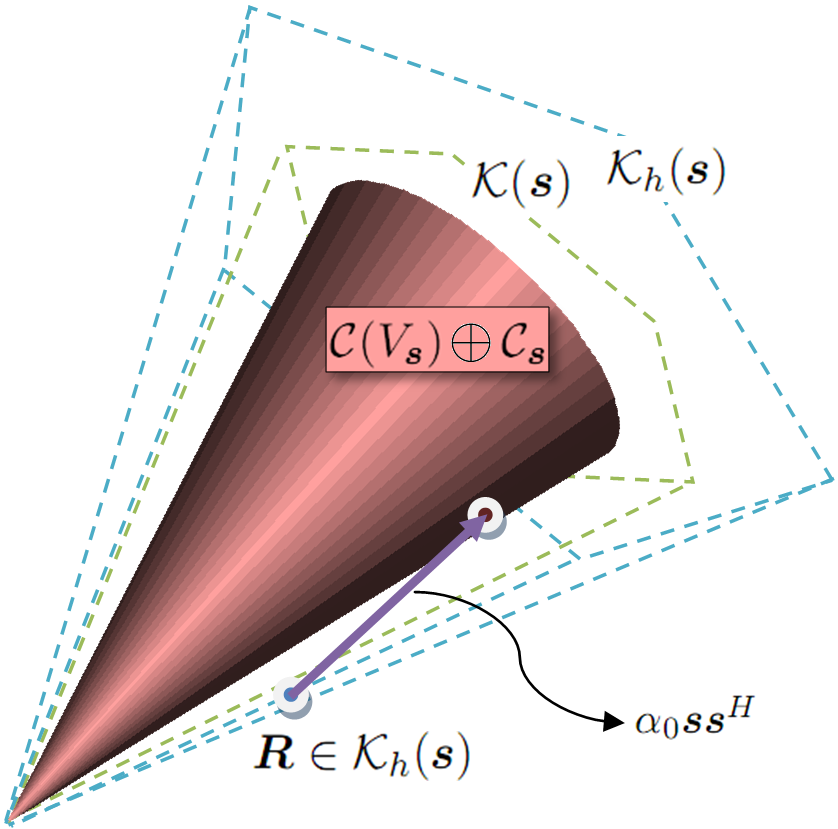

An illustration of the cone approximation technique used for MERIT's derivation in unimodular quadratic programming. See Applications for details. |